Walrus starts to feel fragile in places cryptography never touches.

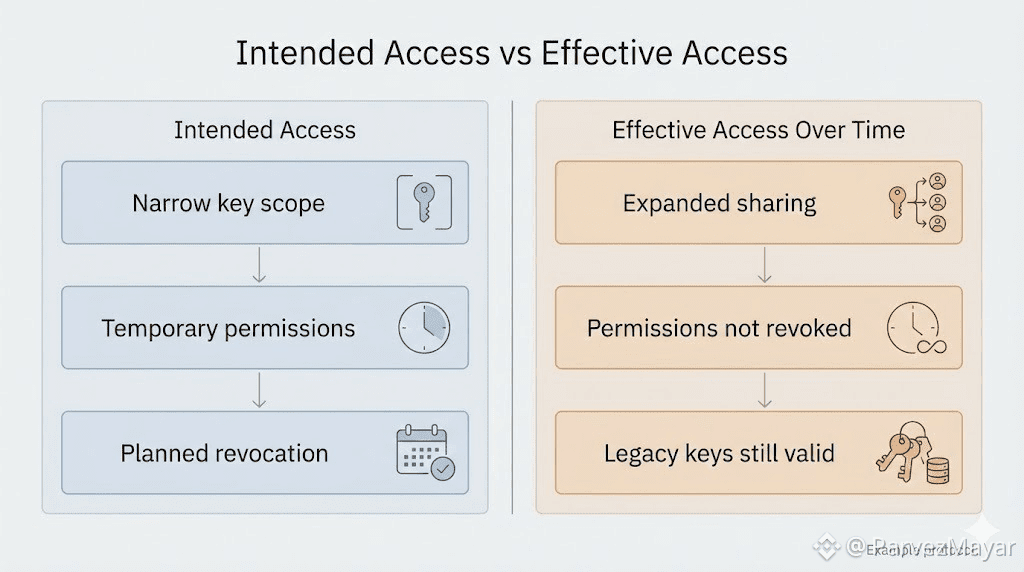

I do not mean broken encryption in storage. I mean the small decisions people make when nothing is technically wrong, the ones that don't look like security decisions at all. A key copied somewhere it does not belong because a deploy is blocked. Access widened because someone needs to unblock a flow and promises to tighten it later. A shortcut taken because the system is behaving correctly, just not quickly enough... because the release window is still open, and "we'll fix it after" is not, in reality, a plan.

The ticket doesn't move until someone can answer for that shortcut.

What fails first is not the crypto itself. It's the calendar. Normal deadlines. The kind where nobody is trying to bypass anything... they're trying to get something shipped without reopening a whole thread.

And what breaks isn't the math. It is the assumption that key handling is a setup task instead of an ongoing liability.

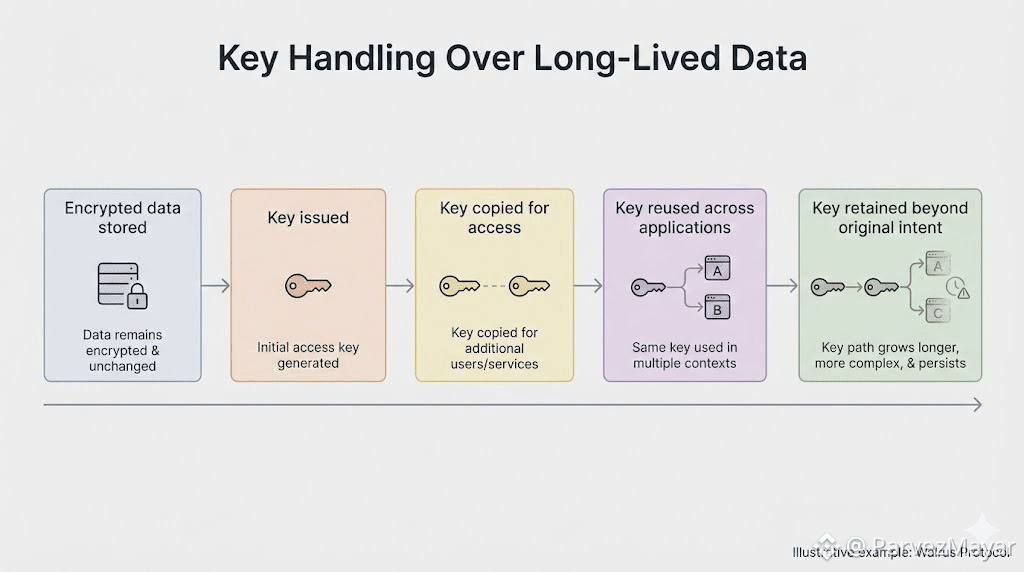

When encrypted blobs live for a long time, move across applications and survive upgrades, keys stop being configuration and start behaving like something that keeps getting handled. They get touched again. Moved again. Reused again. Every touch feels justified in isolation. None of them feels like the moment privacy failed.

Until they age.

The drift starts earlier than a leak. It starts when access expands slightly because revocation would be annoying. When a temporary share becomes a standing permission. When non-custodial storage is still intact... but custody over the keys has already quietly softened across handoffs.

Walrus Protocol matters in this drift because the data does not evaporate. It stays durable long enough for shortcuts to stack up, across terms, across "we'll rotate later" promises. Long-lived encrypted objects don't forgive sloppy key paths. They remember them.

@Walrus 🦭/acc shows up when someone asks a question nobody planned for... who can still read this, right now, and why? Not "in theory." Not "by policy." Right now. The answer depends less on the system's constraints and more on a trail of decisions nobody thought they were making.
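One way to make that question answerable is to record every access decision as data instead of leaving it in chat threads. The sketch below is a minimal illustration, assuming the application keeps its own ledger of read grants alongside its Walrus blobs. `Grant`, `who_can_read`, and `stale_temporary_grants` are hypothetical names for this example only; they are not part of Walrus or any SDK.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class Grant:
    """Hypothetical record of one decision that gave a principal read access."""
    principal: str
    blob_id: str
    reason: str                    # why the access was granted, in plain words
    granted_at: datetime
    expires_at: Optional[datetime]  # None means the grant never lapses on its own


def who_can_read(grants, blob_id, now):
    """Return (principal, reason) for every grant on blob_id still in force at `now`."""
    return [
        (g.principal, g.reason)
        for g in grants
        if g.blob_id == blob_id and (g.expires_at is None or g.expires_at > now)
    ]


def stale_temporary_grants(grants, now, max_age=timedelta(days=30)):
    """Flag grants described as temporary that have no expiry and are older than max_age."""
    return [
        g
        for g in grants
        if "temporary" in g.reason.lower()
        and g.expires_at is None
        and now - g.granted_at > max_age
    ]


if __name__ == "__main__":
    now = datetime(2024, 6, 1)
    grants = [
        Grant("deploy-bot", "blob-a", "temporary: unblock release", datetime(2024, 1, 10), None),
        Grant("analytics", "blob-a", "quarterly report", datetime(2024, 3, 1), datetime(2024, 4, 1)),
        Grant("owner", "blob-a", "data owner", datetime(2023, 12, 1), None),
    ]
    print(who_can_read(grants, "blob-a", now))
    # → [('deploy-bot', 'temporary: unblock release'), ('owner', 'data owner')]
    print([g.principal for g in stale_temporary_grants(grants, now)])
    # → ['deploy-bot']
```

The design choice here is that the `reason` travels with the grant, so "why" is answered by the same query as "who." The expired analytics grant drops out on its own; the "temporary" deploy grant with no expiry is exactly the kind of permission that should have died and did not.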

We have seen teams treat encryption as a finish line. Once data is opaque on the network, attention moves on. But the network is not where most privacy failures happen. They happen closer to the app. Closer to the builder. Closer to the moment where convenience wins a small argument and leaves a permanent mark.

Everything keeps working. Storage still behaves like storage. Retrieval on Walrus still works. The system looks healthy. Privacy degrades without triggering alarms because no rule is ever broken. The damage comes from people.

Not out of negligence. Out of optimization.

Shortcuts form because friction is expensive. Key rotation is annoying. Revocation breaks flows. Narrow access slows teams down. Under pressure, those costs feel more immediate than abstract privacy guarantees. So behavior adapts. Quietly. The system doesn't object. It cannot. It only enforces what it sees.

Walrus makes that drift harder to ignore, because the data doesn't "go away" on its own timeline. It's still there tomorrow. And next week. And maybe the week after, when someone finally asks for the key path as an audit artifact, not a setup detail.

Strong encryption plus weak key discipline still fails, just later. Not in a spectacular breach. In the boring way: somebody discovers an old permission that should've died... a key that got copied "temporarily," an access scope that never tightened back up.